Style Change Detection 2018

Synopsis

- Task: Given a document, determine whether it contains style changes or not.

- Input: [data]

- Evaluation: [code]

- Submission: [submit]

Introduction

While many approaches target the problem of identifying authors of whole documents, research on investigating multi-authored documents is sparse. In the last two PAN editions we therefore aimed to narrow the gap by at first proposing a task to cluster by authors inside documents (Author Diarization, 2016). Relaxing the problem, the follow-up task (Style Breach Detection, 2017) focused only on identifying style breaches, i.e., to find text positions where the authorship and thus the style changes. Nevertheless, the results of participants revealed relatively low accuracies and indicated that this task is still too hard to tackle.

Consequently, this year we propose a substantially simplified task, while still being a continuation of last year's task: The only question that should be answered by participants is whether there exists a style change in a given document or not. Further, we changed the name to Style Change Detection, in order to reflect the task more intuitively.

Given a document, participants thus should apply intrinsic analyses to decide if the document is written by one or more authors, i.e., if there exist style changes. While the precedent task demanded to specifically locate the exact position of such changes, this year we only ask for a binary answer per document:-

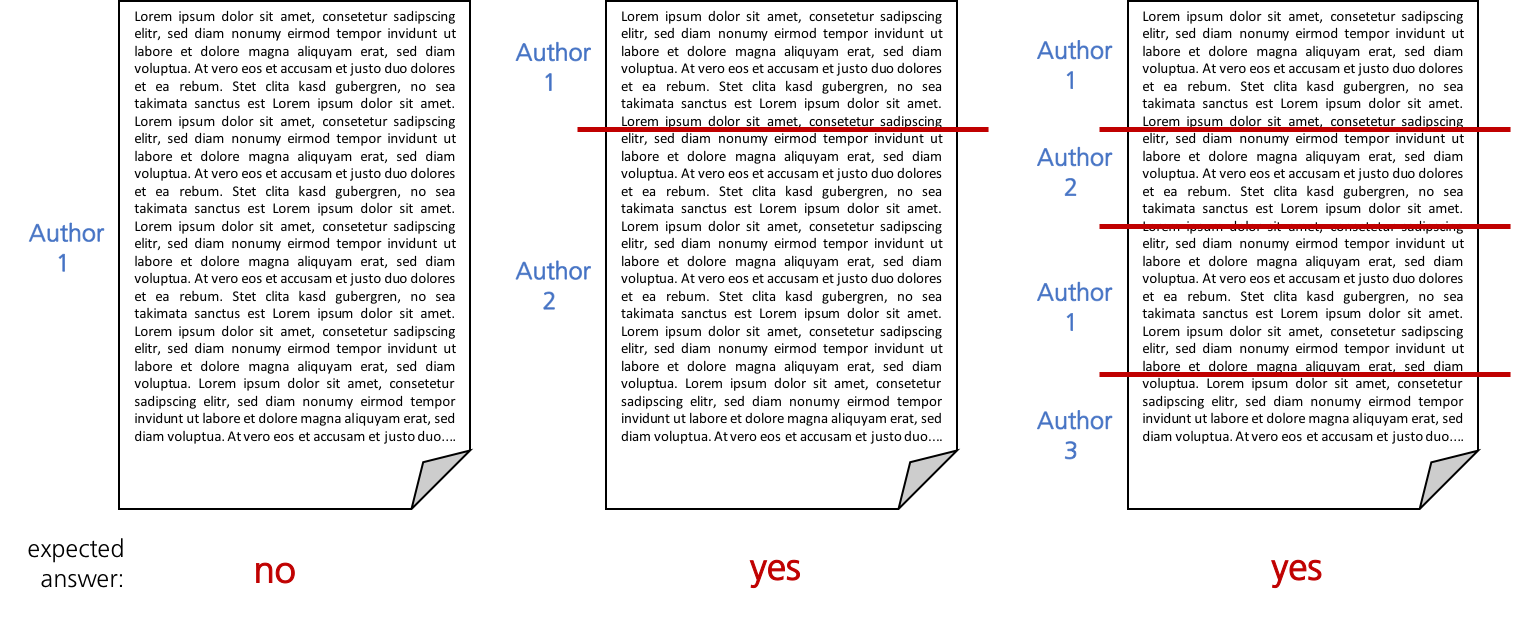

yes: the document contains at least one style change (is written by at least two authors) no: the document has no style changes (is written by a single author)

In this sense it is irrelevant to identify the number of style changes, the specific positions,

or to build clusters of authors. You may adapt existing algorithms other problem types such as

intrinsic plagiarism detection or text segmentation. For example, if you

already have an intrinsic plagiarism detection system, you can apply your method on this task by outputting

yes if you

found a plagiarism case or no otherwise (please note that intrinsic plagiarism

detection methods may need adaptions as they naturally are not designed to handle uniformly distributed

author texts).

The following figure illustrates some possible scenarios and the expected output:

Task

Given a document, determine whether it contains style changes or not, i.e., if it was written by a single or multiple authors.

All documents are provided in English and may contain zero up to arbitrarily many style changes.

Development Phase

To develop your algorithms, a data set including corresponding solutions is provided:

- a training set: contains 50% of the whole dataset and includes solutions. Use this set to feed/train your models.

- a validation set: contains 25% of the whole dataset and includes solutions. Use this set to evaluate and optimize your models.

- a test set: contains 25% of the whole dataset and does not include solutions. This set is used for evaluation (see later).

The whole data set is based on user posts from various sites of the StackExchange network, covering different topics and containing approximately 300 to 1000 tokens per document. Moreover and for your convenience, within the training/validation data set the exact locations of all style changes are provided, as they may be helpful to develop your algorithms.

Additionally, we provide detailed meta data about each problem of all data sets, including topics/subtopics, author distributions or average segment lengths.

For each problem instance X, two files are provided:

problem-X.txtcontains the actual textproblem-X.truthcontains the ground truth, i.e., the correct solution in JSON format:{ "changes": true/false, "positions": [ character_position_change_1, character_position_change_2, ... ] }If present, the absolute character positions of the first non-whitespace character of the new segment is provided in the solution (

positions). Please note that this information is only for development purposes and not used for the evaluation.

Evaluation Phase

Once you finished tuning your approach to achieve satisfying performance on the training corpus, your software will be tested on the evaluation corpus (test data set). You can expect the test data set to be similar to the validation data set, i.e., also based on StackExchange user posts and of similar size as the validation set. During the competition, the evaluation corpus will not be released publicly. Instead, we ask you to submit your software for evaluation at our site as described below. After the competition, the evaluation corpus will become available including ground truth data. This way, you have all the necessities to evaluate your approach on your own, yet being comparable to those who took part in the competition.

Output

In general, the data structure during the evaluation phase will be similar to that in the

training phase, with the exception that the ground truth files are missing. Thus, for each given problem

problem-X.txt your software should output the missing solution file

problem-X.truth.

The output should be a JSON object containing of a single property:

{

"changes": true/false

}Output "changes" : true if there are style changes in the document, and "changes"

:

false otherwise.

Performance Measures

The performance of the approaches will simply be measured and ranked by computing the accuracy.

It takes three parameters: an input directory (the data set), an inputRun directory (your

computed predictions) and an output directory where the results file is written to. In addition

to the

accuracyachieved over the whole input directory, also an

accuracy_solved is computed that

considers only solved problem instances (i.e., if you only solved two problem instances and were

correct both times, the accuracy_solved would be 100%). Please note that this

measure is only for developing purposes and that accuracy over all items will be

used for the final evaluation.

Submission

We ask you to prepare your software so that it can be executed via command line calls. The command shall take as input (i) an absolute path to the directory of the evaluation corpus and (ii) an absolute path to an empty output directory:

mySoftware -i EVALUATION-DIRECTORY -o OUTPUT-DIRECTORYWithin EVALUATION-DIRECTORY, you will find a list of problem instances, i.e., [filename].txtfiles.

For each problem instance you should produce the solution file [filename].truth in

theOUTPUT-DIRECTORY For instance, you

readEVALUATION-DIRECTORY/problem-12.txt,

process it and write your results to OUTPUT-DIRECTORY/problem-12.truth.

You can choose freely among the available programming languages and among the operating systems Microsoft Windows and Ubuntu. We will ask you to deploy your software onto a virtual machine that will be made accessible to you after registration. You will be able to reach the virtual machine via ssh and via remote desktop. More information about how to access the virtual machines can be found in the PAN Virtual Machine User Guide.

Once deployed in your virtual machine, we ask you to access TIRA at www.tira.io, where you can self-evaluate your software on the test data.

Note: By submitting your software you retain full copyrights. You agree to grant us usage rights only for the purpose of the PAN competition. We agree not to share your software with a third party or use it for other purposes than the PAN competition.

Related Work

- Style Breach Detection, PAN@CLEF'17

- Author Clustering, PAN@CLEF'16

- Marti A. Hearst. TextTiling: Segmenting Text into Multi-paragraph Subtopic Passages.. In Computational Linguistics, Volume 23, Issue 1, pages 33-64, 1997.

- Benno Stein, Nedim Lipka and Peter Prettenhofer. Intrinsic Plagiarism Analysis. In Language Resources and Evaluation, Volume 45, Issue 1, pages 63–82, 2011.

- Patrick Juola. Authorship Attribution. In Foundations and Trends in Information Retrieval, Volume 1, Issue 3, March 2008.

- Efstathios Stamatatos. A Survey of Modern Authorship Attribution Methods. Journal of the American Society for Information Science and Technology, Volume 60, Issue 3, pages 538-556, March 2009.